Columnist Jahred Nunes

It was only a few years ago that artificial intelligence and robotics seemed like little more than a good sci-fi plotline. With the advent of Uber’s self-driving delivery truck and new “auto-pilot” modes featured in more and more vehicles, science fiction dreams of chrome and androids are becoming a reality.

According to Pentagon military strategists, the more glamorous and humanitarian side of the future can wait. In an example of true American hospitality, the obvious question posed to artificial intelligence developers was “Can it kill stuff?” prompting Pentagon researchers to dive deep into the development of autonomous weapons.

The United States has put research and development of this new technology at the forefront of its military strategy and, just a few weeks ago, tested the first autonomous drone. Looking like little more than the six-rotor commercial drones that can be found on Amazon, this drone was able to navigate a replica Middle Eastern village and identify targets based on their weaponry, even in the dark.

But should these weapons be allowed to decide whether or not to kill? The fact of the matter is developers of this technology have the power to decide the future of warfare, bringing them to what is being called the “Terminator Conundrum.”

Many skeptics of autonomous weapons worry about similar developments in other countries. When it comes to the question of foreign policy, there seems to be no consensus on what actions to take. There is discussion of international treaties against autonomous weapons, and there are suggestions to develop this technology to keep a military edge over US enemies.

Technology such as this would be easy to use in countries such as Russia and North Korea, as both countries often stifle the voice of the people and in turn would have little to no public backlash, making the American decision of what to do with the technology all the more urgent.

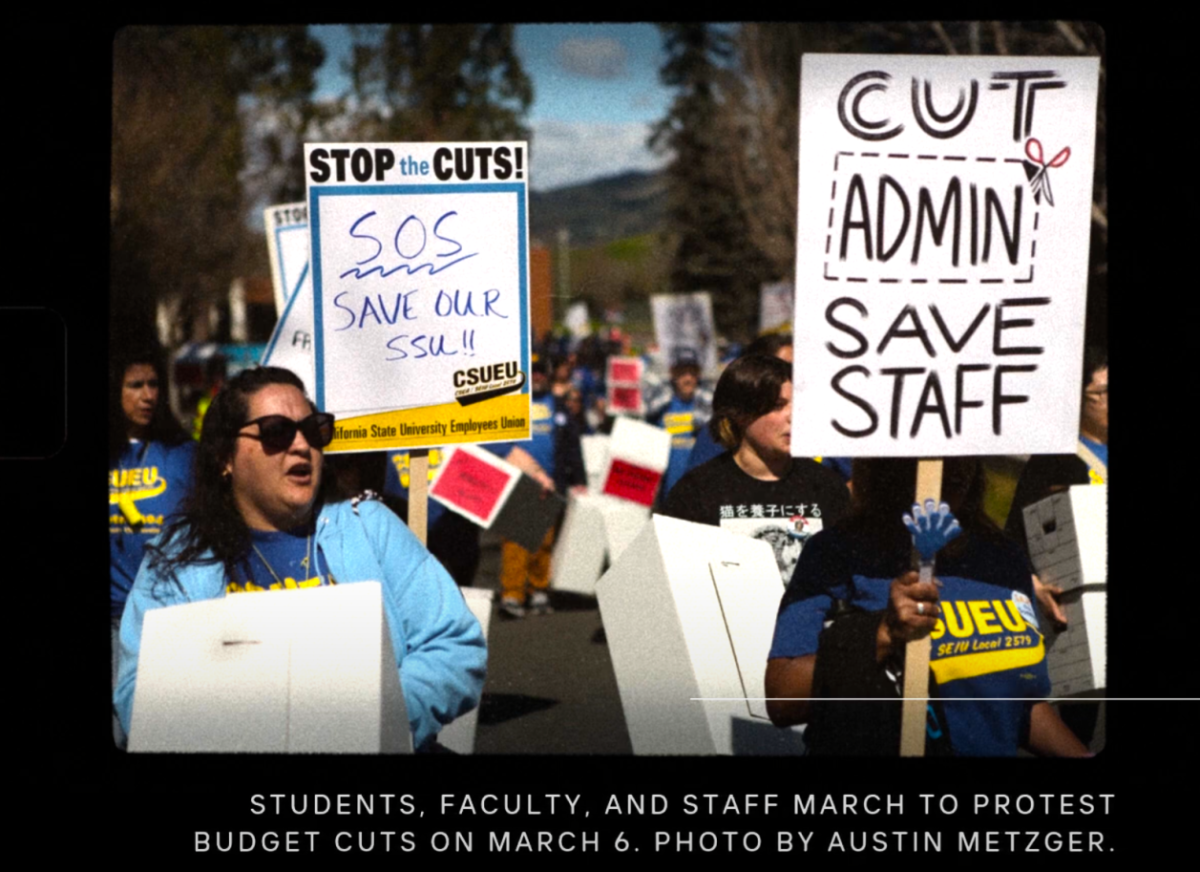

Hilary Swift // The New York Times

Interestingly, support for this technology comes in the predicted number of casualties, or lack thereof. In a test of the drone’s facial recognition software, developed by American intelligence agencies, the drone was eerily able to discern friend from foe, even recognizing a photographer as harmless while the camera was pointed at it. Situations such as this have often confused soldiers in the past with fatal results.

To be clear, the drone could not turn itself on and fly away; that responsibility still belongs to humans. However, once in the air, it’s able to decide upon its own targets based on what it’s been told to look for.

This brings up another important question: what does an American enemy look like? Sure, this technology has been able to perform well in pseudo-conditions created by its developers, but does that completely eliminate its margin of error, and what sort of consequences could follow from those errors?

The reality is there is only enough information to ask questions at this point. Members of the development team have stated that there will always be a “man in the loop,” meaning no drone will have full independence. According to defense developers, the vision is to create and improve tools for soldiers in what’s called centaur warfighting, referencing the half-man, half-horse of Greek mythos.

Throughout history, it has been technological developments such as these that drew America into war, submarine and chemical warfare being prime examples during the World Wars… just some food for thought.